Genome Jazz

2020-08-31 composition science!

The COVID-19 pandemic has made everybody interested in virology, and molecular biology more generally, and one of the cool things about living in the Future is that genome sequences have become accessible. Where it used to be the work of a person's entire career to maybe obtain just a fragment of the code for a single gene, now there are machines that can obtain a complete sequence for a sample in just hours or days. And unlike a lot of other scientific data, it's become the usual practice for gene sequences to be shared. Any ordinary person, not just a capital-S Scientist, can just go on the Web and download the sequences for genes. So here's one of my lockdown projects: using that public genome data for sonification.

The genetic information of a living cell is typically stored in one or more molecules of deoxyribonucleic acid (DNA), which are long chains of sugar/phosphate units; at regular intervals along the chain, there are bases attached, and those come in four different kinds represented by the letters A, C, G, and T (for "adenine," "cytosine," "guanine," and "thymine"). The sequence of the different bases records information about how to build proteins. Cells contain general-purpose construction systems that build proteins to maintain the cell, and even build new cells, according to the instructions encoded into the DNA.

I thought it would be cool to translate DNA sequences into music, especially if we could hear in the music some of the properties of the protein the sequence codes for. For instance, a lot of proteins embed themselves in biological membranes, anchored by hydrophobic segments that are attracted to the "oily" membrane interior while the hydrophilic parts of the protein stick out into the surrounding liquid. So the protein chain contains alternating blocks of hydrophobic and hydrophilic amino acid residues that cause it to fold into the proper shape and then stick in the membrane. Could we make that audible? Could we make other structural aspects of the DNA audible in the structure of the music? Everybody's interested in SARS-CoV-2, the virus responsible for the pandemic; so could we make music out of that, and would it sound interesting?

I spent a lot of time playing with DNA sequences and different ways of translating them to music. SARS-CoV-2 doesn't actually have DNA - it is an RNA virus, with its genome encoded in ribonucleic acid (RNA) instead of DNA - so I had to switch to looking at RNA sequences if I wanted to cover the virus, but that's a relatively minor detail. RNA is written in basically the same code as DNA, with the substitution of uracil (U) for thymine (T). More complicated DNA-based life uses RNA too: the DNA code is "transcribed" into messenger RNA, the messenger RNA is often subjected to some editing, and then the result is "translated" into proteins.

In both RNA and DNA sequences, the bases are read in groups of three, and each of the 64 possible groups is a command to the protein-assembling system, either to add a specific amino acid to the protein or to end the assembly; the start of assembly is marked by a start codon, which also codes for an amino acid.
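With four bases and three positions per group there are 4 × 4 × 4 = 64 possible groups (codons). As a small illustration, the Python sketch below enumerates them and splits a sequence into codons in a single reading frame. The example sequence is made up, and real translation would also need to locate the start codon first.

```python
from itertools import product

BASES = "ACGU"  # RNA alphabet; DNA uses T where RNA uses U

# Every three-base group: 4 choices at each of 3 positions, 4**3 = 64.
ALL_CODONS = ["".join(p) for p in product(BASES, repeat=3)]

def codons(seq: str) -> list[str]:
    """Split seq into consecutive non-overlapping triplets, starting at
    the first base; an incomplete final triplet is dropped."""
    return [seq[i:i + 3] for i in range(0, len(seq) - 2, 3)]

print(len(ALL_CODONS))         # 64
print(codons("AUGGCCAUUGUA"))  # ['AUG', 'GCC', 'AUU', 'GUA']
```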

It seems like it'd make sense to just translate each base into a note and write it in 3/4 time to line up with the triple rhythm of the genetic code. A, C, and G happen to be note names already, so we just need to do something with the T/U code letter. I picked the note D to represent thymine or uracil, because it fits musically with the others: C, G, D, A are consecutive in the circle of fifths. So a first attempt at making music out of an RNA sequence might go something like this.
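The naive note mapping is simple enough to sketch in a few lines of Python. This is only an illustration with a hypothetical function name; octaves, note durations, and actual audio or MIDI output are left out.

```python
# A, C, and G keep their own note names; T (DNA) or U (RNA) becomes D.
BASE_TO_NOTE = {"A": "A", "C": "C", "G": "G", "T": "D", "U": "D"}

def to_bars(seq: str) -> list[list[str]]:
    """One note per base and three notes (one codon) per bar of 3/4.
    A final incomplete bar is kept as it is."""
    notes = [BASE_TO_NOTE[base] for base in seq.upper()]
    return [notes[i:i + 3] for i in range(0, len(notes), 3)]

print(to_bars("AUGGCCAUU"))  # [['A', 'D', 'G'], ['G', 'C', 'C'], ['A', 'D', 'D']]
```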

That didn't work well. It didn't really sound like anything except a random sequence of notes chosen from a small set. It got boring really fast, and that was especially a problem because many interesting gene sequences are long, often thousands of bases. I get tired of stuff like the above within a few seconds, and I don't want to generate pieces many minutes long that nobody will want to listen to.

So my next thought was to map the four letters of the RNA code onto musical intervals instead of musical notes. If, say, A means "play a note that is a perfect fifth up or down from the previous note," U/T means "play a note that is a major or minor second up or down from the previous note," and so on, then there's some flexibility in which notes to play while still having the music strongly influenced by the genetic code. I wrote software to choose the undetermined details, like whether to go up or down and whether to use major or minor intervals, according to musical goals like keeping the melody interesting and staying in (or near) a chosen key. In this example, the code is U - major or minor second; G - major or minor third; C - perfect fourth; A - perfect fifth.
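The scoring rules that software actually used aren't spelled out here, so the following Python is only a hypothetical greedy sketch of the idea. Pitches are MIDI-style note numbers, C major is an assumed target key, and among the allowed candidates the sketch prefers notes in the key and then notes near an arbitrary central pitch.

```python
# Interval code from the example above, in semitones; each may go up or down.
INTERVALS = {"U": (1, 2), "T": (1, 2),  # major or minor second
             "G": (3, 4),               # major or minor third
             "C": (5,),                 # perfect fourth
             "A": (7,)}                 # perfect fifth

C_MAJOR = {0, 2, 4, 5, 7, 9, 11}  # pitch classes of the assumed key

def melody(seq: str, start: int = 60, center: int = 64) -> list[int]:
    """Greedy choice of direction and interval size for each base.
    Hypothetical scoring: notes in the key first, then notes close to
    `center`, so the line neither leaves the key nor drifts in range."""
    notes = [start]
    for base in seq.upper():
        prev = notes[-1]
        candidates = [prev + sign * step
                      for step in INTERVALS[base] for sign in (1, -1)]
        notes.append(min(candidates,
                         key=lambda n: (n % 12 not in C_MAJOR, abs(n - center))))
    return notes

print(melody("AUGC"))  # [60, 67, 65, 62, 67]
```

A fuller version would look more than one note ahead and weigh more musical goals; the greedy choice just shows where the freedom in the interval mapping lies.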

Although I spent a lot of time on RNA sonification, I'm not going to give any audio samples, because they all sounded bad; I ended up throwing them out and shelving the whole project for a couple of months. Among other things, I worked on the Middle Path VCO instead.

One interesting thing I do want to mention on RNA sonification is that part of the reason I had a hard time linking music to biology was that the biology is messed up. Messenger RNA sequences like the ones I was using do not actually get translated directly to proteins. They get edited first. In organisms like humans the edits can be pretty extensive; there are entire chunks called "introns" that routinely get spliced out of the mRNA before it goes to translation. Viruses are usually simpler on that point. But coronaviruses in particular have a thing called a "programmed -1 frameshift." Some sequences are harder for the cell to translate into proteins than others, and when the translation complex hits one of those sequences, it "pauses." Sometimes it randomly falls apart (the mRNA dissociates from the ribosome) at that point. Other times, it starts translating again but skips backward one base in the sequence. So one of the bases gets read twice, and two amino acids come from five bases instead of six.

The crazy thing is that in coronaviruses when that happens, it's actually not an error. The virus uses it for regulation of expression. The proteins encoded before the pause are ones that the virus needs in larger quantities; because the translation often stops at the pause, proteins encoded after it are produced in smaller quantities. And the double read of the base where the frameshift occurs is needed for the sequence after that point to be read in the proper frame. So I thought it would be cool to have the frameshift be included in the music, maybe as a couple of odd-length bars in the middle of the prevailing 3/4.
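A simplified model of that slip, following the description above rather than real ribosome mechanics: read triplets left to right, and after a given number of codons step back one base. In this Python sketch the slip position and the example sequence are made up. The second number in each pair counts how many bases of the literal sequence each codon adds, which is where an odd-length bar would come from.

```python
def read_with_slip(seq: str, slip_after: int) -> list[tuple[str, int]]:
    """Read codons left to right, stepping back one base (-1 frameshift)
    after `slip_after` codons have been read in the original frame.

    Returns (codon, new_bases) pairs: new_bases is how many bases of the
    literal sequence the codon adds. The codon right after the slip
    re-reads one base, so it adds only two: two codons from five bases.
    """
    result = []
    pos = 0      # start of the next codon
    read_to = 0  # end of the literal sequence read so far
    while pos + 3 <= len(seq):
        end = pos + 3
        result.append((seq[pos:end], end - read_to))
        read_to = end
        pos = end
        if len(result) == slip_after:
            pos -= 1  # the ribosome steps back one base
    return result

print(read_with_slip("UUUAAACGGCAU", 2))
# [('UUU', 3), ('AAA', 3), ('ACG', 2), ('GCA', 3)]
```

Here the last A of AAA is read twice, so AAA and ACG together use five bases; played as written, the codon that adds only two new bases would become the short bar in the 3/4.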

When I came back to the sonification project after leaving it on "pause" for a while, I made a frameshift of my own: I started looking at protein sequences instead of RNA. I realized that it's really the proteins that are interesting, biologically. The features I really wanted to be able to hear in my music are features of the final protein, and if I try to sonify DNA or RNA, I'm forcing the listener to listen for those features through the layer of translation from genetic code to protein sequence, as well as whatever wacky editing the cell is going to do on the messenger RNA before translating it. I did try using already-edited mRNA sequences, but those aren't easy to get from the gene databases, and in the case of something like this coronavirus frameshift, I'd have to construct a hypothetical sequence of "what the ribosome reads, by deliberate mistake, even though no molecule with that literal sequence ever actually exists," and that seemed philosophically distasteful. Many of these problems go away if I make my music from protein sequences instead of the RNA that generates them.

Proteins are long molecular chains, too, but they're made of units called amino acids (formally: amino acid residues) and there are many more than four choices for those. There are basically 20 standard amino acids in proteins, plus a couple of exceptional ones not included in the standard genetic code. And they can be said to have meanings. Whereas a cytosine in an RNA sequence basically just means "two bits of some six-bit code word for some amino acid residue," a cysteine (note, not the same word) in a protein sequence means "this part of the protein is hydrophilic and has a thiol group hanging off it, which means it can form a cross-link that affects the folding of the protein in a certain way." If we can turn each amino acid into some kind of a musical concept, maybe the structure of the protein would be perceptible in the music in some way.

If I just wrote a chunk of music, say one bar, for each of the 20 standard amino acids, then I could guarantee that each one would sound okay, but just playing them in sequence would get boring really fast. Instead, what I came up with was a constraint-based approach. For each amino acid, I defined a rhythm for the melody, and I based those rhythms on Morse code, modified a little to fit better with the kinds of rhythms that work in music. For instance, the amino acid sequence serine, glycine, aspartic acid is SGD using the standard letter code for amino acids, and in Morse that is "... --. -.." (dididit dadadit dadidit). Translating dots to eighth notes, dashes to quarter notes, and the gap after each letter to an eighth-note rest, it forms a musical rhythm like this.

This lines up with the standard timing of Morse code if we count the single-unit space after each element as part of the element, and each time unit is a sixteenth note. A dot in Morse is one unit of mark followed by one unit of space (total two units, one eighth note); a dash is three units of mark followed by one unit of space (total four units, one quarter note); and the standard three-unit space between letters, minus the one unit already counted with the preceding dot or dash, leaves two more units of space (one eighth rest).
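The rhythm rule, and its agreement with standard Morse timing, can be checked mechanically. This Python sketch uses standard International Morse code for the 20 one-letter amino acid codes and measures everything in sixteenth notes. It implements only the plain rule described above, not the later modifications made to fit musical rhythms better.

```python
# International Morse code for the 20 standard one-letter amino acid codes.
MORSE = {
    "A": ".-",   "C": "-.-.", "D": "-..",  "E": ".",    "F": "..-.",
    "G": "--.",  "H": "....", "I": "..",   "K": "-.-",  "L": ".-..",
    "M": "--",   "N": "-.",   "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...",  "T": "-",    "V": "...-", "W": ".--",  "Y": "-.--",
}

def rhythm(letters: str) -> list[tuple[str, int]]:
    """(event, duration in sixteenths): dot = eighth note (2),
    dash = quarter note (4), then an eighth rest (2) after each letter."""
    events = []
    for letter in letters:
        for element in MORSE[letter]:
            events.append(("note", 2 if element == "." else 4))
        events.append(("rest", 2))
    return events

def standard_units(letter: str) -> int:
    """Standard Morse timing for one letter: dot 1, dash 3, one unit
    between elements, three units after the letter."""
    code = MORSE[letter]
    marks = sum(1 if element == "." else 3 for element in code)
    return marks + (len(code) - 1) + 3

print([sum(d for _, d in rhythm(x)) for x in "SGD"])  # [8, 12, 10]
print([standard_units(x) for x in "SGD"])              # [8, 12, 10]
```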

But doing it that way has the problem that the timing is irregular. I wanted each amino acid to occupy the same amount of time. That meant stretching and shrinking the timing to make each one occupy a single bar of music. I chose a 12/8 time signature to have lots of convenient divisors, but it still meant adding some tuplets.
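One way to do the stretching, assuming every duration in a letter (including its trailing rest) is scaled by the same factor, which may not be exactly what the final pieces did: a 12/8 bar is 24 sixteenths, and Python's `fractions` module shows which letters come out in whole sixteenths and which need tuplets.

```python
from fractions import Fraction

MORSE = {"D": "-..", "G": "--.", "K": "-.-", "S": "..."}  # subset for the example
BAR = 24  # one bar of 12/8 = 12 eighth notes = 24 sixteenth notes

def bar_rhythm(letter: str) -> list[Fraction]:
    """Durations in sixteenths for one letter, scaled to fill one bar.
    Dots are 2, dashes 4, and the final entry is the 2-unit rest."""
    raw = [2 if element == "." else 4 for element in MORSE[letter]] + [2]
    factor = Fraction(BAR, sum(raw))
    return [d * factor for d in raw]

def needs_tuplets(durations: list[Fraction]) -> bool:
    """True if any duration is not a whole number of sixteenths."""
    return any(d.denominator != 1 for d in durations)

for letter in "SGDK":
    durations = bar_rhythm(letter)
    print(letter, [str(d) for d in durations], needs_tuplets(durations))
```

With this scaling, S becomes four dotted quarters and G and K come out in half and quarter values, but D's dash stretches to 48/5 sixteenths, so that bar needs a tuplet.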

Then I made up constraints on pitch for the melody notes corresponding to an amino acid. For example, lysine has the abbreviation K, which is -.- in Morse, and I defined these constraints:

- The first note is at least a minor third (three semitones), no more than a major sixth (nine semitones), and not exactly a tritone (six semitones) up or down from the last note of the previous bar.

- The second note is between a perfect fourth and a perfect fifth down from the first.

- The third note is at most one semitone away from the first note.

There are many possible ways to choose three notes meeting those conditions; which one will be used isn't specified yet. The idea is that if there are many bars of music meeting these conditions in a piece, they will all sound similar, similar enough that you may recognize them as somehow the same motif, but they won't necessarily be identical. If two parts of a protein are hydrophilic and each has a lot of lysine in it, they may sound reminiscent of each other; and they'll sound different from a section that might be hydrophobic with a lot of glycine and valine instead.
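To put a number on "many": the three melodic rules alone, written as a brute-force filter in Python (pitches as semitone numbers, bass and key ignored), allow 12 choices for the first note and 3 × 3 for the other two, so 108 candidate bars after any given previous note. The function names are just for illustration.

```python
def lysine_ok(prev: int, a: int, b: int, c: int) -> bool:
    """Melodic rules for a lysine bar. prev is the last melody note of the
    previous bar; a, b, c are this bar's three notes (in semitones)."""
    leap = abs(a - prev)
    return (3 <= leap <= 9 and leap != 6  # minor third to major sixth, no tritone
            and 5 <= a - b <= 7           # fourth to fifth below the first note
            and abs(c - a) <= 1)          # within a semitone of the first note

def lysine_bars(prev: int) -> list[tuple[int, int, int]]:
    """Every (a, b, c) obeying the rules; a window of +/-20 semitones
    around prev is wide enough to contain all of them."""
    window = range(prev - 20, prev + 21)
    return [(a, b, c) for a in window for b in window for c in window
            if lysine_ok(prev, a, b, c)]

print(len(lysine_bars(60)))  # 108
```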

Then I added a bunch of other constraints designed to make the result more musical, and to try to bring in biological information about the functions of the different amino acids. In particular, I specified that each bar of the music had to be in some major or minor key. For each amino acid, I chose two pitches (a minor third apart for hydrophobic amino acids, a major second apart for hydrophilic) and required that the keys should be chosen for each bar so that those two pitches would be in key, even if they didn't happen to occur in the melody. Other than that, there should be as little movement of keys from bar to bar as possible. I specified that there should be a bassline that would go up and down whatever was the current scale, in a fixed rhythm of three half notes per bar - thereby suggesting the triple rhythm of the DNA/RNA code and creating some polyrhythmic effects.
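The key rule can also be sketched outside a constraint solver. The Python below swaps in plain dynamic programming instead: keys are the 12 major and 12 natural-minor scales (which form of minor the real code used isn't stated, so natural minor is an assumption), each bar lists its two required pitch classes, and the cost is simply the number of bar-to-bar key changes.

```python
MAJOR = (0, 2, 4, 5, 7, 9, 11)
NATURAL_MINOR = (0, 2, 3, 5, 7, 8, 10)  # assumed form of the minor scale

# Key = (tonic pitch class, mode) -> set of pitch classes in its scale.
KEYS = {(tonic, mode): {(tonic + step) % 12 for step in steps}
        for tonic in range(12)
        for mode, steps in (("major", MAJOR), ("minor", NATURAL_MINOR))}

def choose_keys(required: list[set[int]]) -> list[tuple[int, str]]:
    """Pick one key per bar so that every bar's required pitch classes are
    in its key, minimizing the number of key changes. Assumes at least one
    bar and that each bar's pitches fit some key."""
    # best[key] = (key changes so far, path of keys ending in `key`)
    best = {k: (0, [k]) for k, scale in KEYS.items() if required[0] <= scale}
    for req in required[1:]:
        best = {k: min((cost + (prev != k), path + [k])
                       for prev, (cost, path) in best.items())
                for k, scale in KEYS.items() if req <= scale}
    return min(best.values())[1]

# Bars needing {C, D}, {A, C}, {F#, A}: only G major and E minor hold all
# four notes, so no key change is needed; the tie is broken toward E minor.
print(choose_keys([{0, 2}, {9, 0}, {6, 9}]))
# [(4, 'minor'), (4, 'minor'), (4, 'minor')]
```

Copying whole paths keeps the sketch short but costs quadratic time; for a long protein, storing back-pointers would be the usual fix.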

The resulting collection of constraints was about 670 lines of messy ECLiPSe-Prolog code, which I don't plan to share in its entirety, but here's a sample.

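```prolog
% Pitch constraints for one bar representing a lysine residue (K, -.- in Morse).
% [X,Y,Z]: the bar's three bass notes; TZ: last melody note of the previous bar;
% [A,B,C]: the bar's three melody notes; O: this bar's objective term.
amino_acid_constraints_i(lysine, [X,Y,Z], TZ, [A,B,C], O) :-
    % First note: 3 to 9 semitones up or down from TZ, but not a tritone.
    TZA #:: [-9,-8,-7,-5,-4,-3,3,4,5,7,8,9],
    TZA #= A - TZ,
    % Second note: a perfect fourth to a perfect fifth below the first.
    B #>= A - 7, B #=< A - 5,
    % Third note: within one semitone of the first.
    C #>= A - 1, C #=< A + 1,
    % Each melody note must be consonant with the bass note(s) under it.
    ok_int(X, A), ok_int(Y, B), ok_int(Y, C), ok_int(Z, C),
    % Objective term: how many of the melody notes are below pitch 84.
    O #= (A #< 84) + (B #< 84) + (C #< 84).
```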

That code describes, in formal terms that the computer can work with, the rules for note pitches in a bar representing a lysine residue. X, Y, and Z are the bar's three bass notes, A, B, and C are its three melody notes, and TZ is the last melody note of the previous bar. TZA is the difference in pitch, in semitones, between the first melody note of this bar and the last of the previous bar; its magnitude has to be in the range 3 to 9, but not 6, and it can be positive or negative. Those are the rules I mentioned earlier. Similarly, the second note (B) has to lie five to seven semitones below the first, and the third note (C) within one semitone of the first. The ok_int calls force each melody note to form a consonant interval with the bass note or notes that it plays above. And the last line calculates part of an objective function (hence the variable name "O"): it counts how many of the three melody notes are below pitch 84, which encourages notes not to be too high-pitched without putting a hard limit on them.
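The same predicate can be mirrored as a brute-force search in Python, which also shows how the objective term ranks solutions. ECLiPSe itself propagates constraints rather than enumerating, and ok_int isn't shown above, so the version here is a hypothetical stand-in: the melody note must lie above the bass and form a unison or octave, a third, a perfect fifth, or a sixth with it. Pitches are plain semitone numbers.

```python
from typing import Optional, Tuple

Bar = Tuple[int, int, int]

CONSONANT = {0, 3, 4, 7, 8, 9}  # assumed consonant intervals above the bass, mod 12

def ok_int(bass: int, note: int) -> bool:
    """Hypothetical stand-in for ok_int/2: note above the bass, consonant."""
    return note > bass and (note - bass) % 12 in CONSONANT

def lysine_bar(bass: Bar, tz: int) -> Optional[Bar]:
    """Search all (A, B, C) meeting the lysine rules over bass notes
    (X, Y, Z) and previous note TZ; keep the first one with the largest O."""
    x, y, z = bass
    best, best_o = None, -1
    for a in range(tz - 9, tz + 10):
        if abs(a - tz) not in (3, 4, 5, 7, 8, 9):  # TZA domain
            continue
        for b in range(a - 7, a - 4):               # A-7 =< B =< A-5
            for c in range(a - 1, a + 2):           # A-1 =< C =< A+1
                # Same bass alignment as the Prolog: X under A,
                # Y under B and C, Z under C.
                if ok_int(x, a) and ok_int(y, b) and ok_int(y, c) and ok_int(z, c):
                    o = (a < 84) + (b < 84) + (c < 84)  # objective term O
                    if o > best_o:
                        best, best_o = (a, b, c), o
    return best

print(lysine_bar((48, 50, 45), 72))  # (64, 57, 65)
```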

It took a lot of interactive trial and error with the constraint solver, finding constraint rules that could be solved and would actually produce reasonably musical output, but I eventually got something I was satisfied with. Here's a typical bar of the output. This is lysine again; note how the melody in the treble matches the constraints described above.

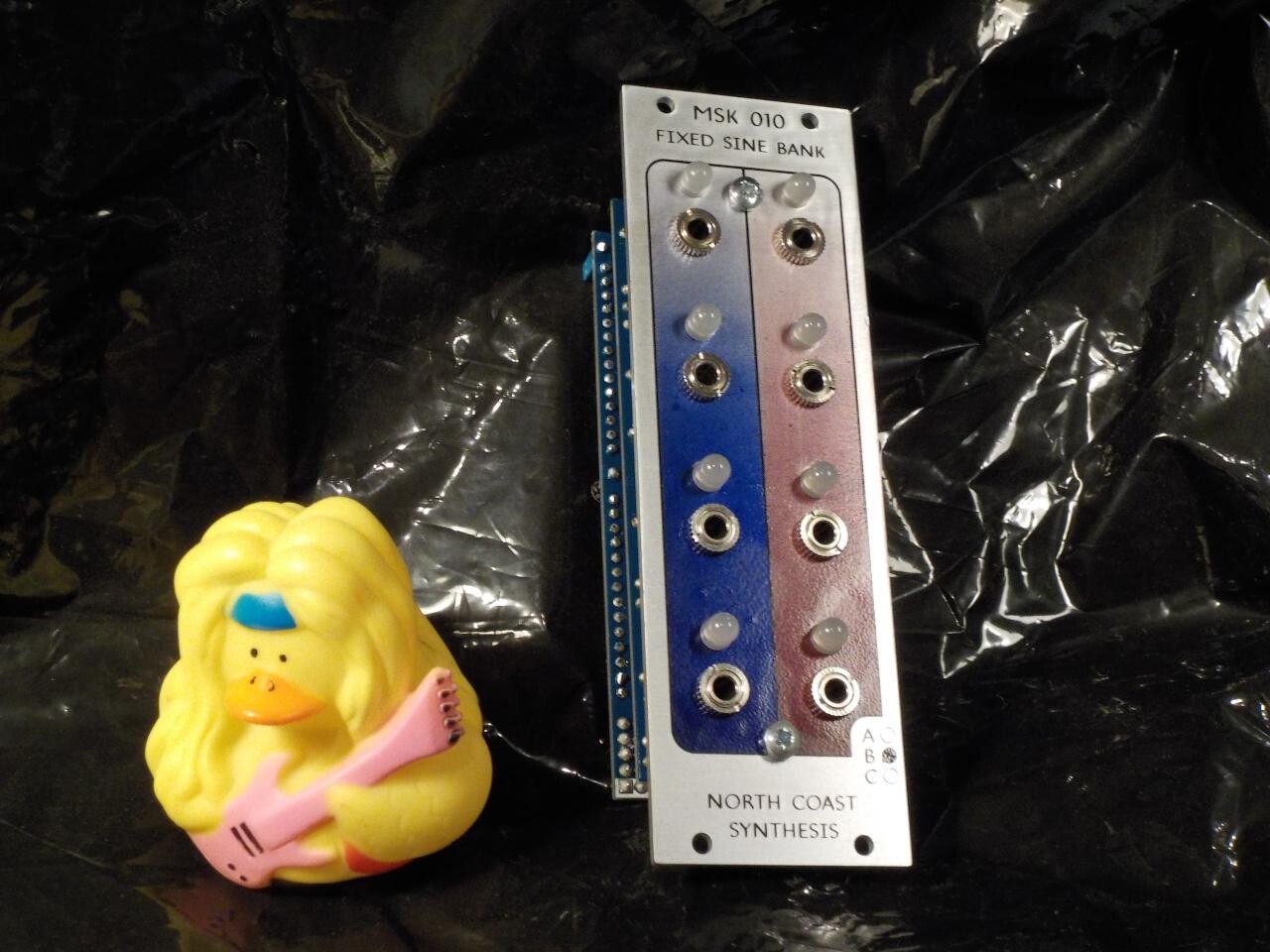

I put it all together and filled in some more details, and I managed to create MIDI files of music for several protein sequences. I mostly picked short ones so that the resulting music wouldn't tax the listeners' patience too much. I rendered the MIDI on my modular synth using my own modules - in particular, the new MSK 013 Middle Path VCO, with a couple of different patches including one where I took output from the oscillator through a Coiler VCF and then back into the oscillator's waveshaper.

Here's a short one: the human beta-amyloid 42 protein fragment associated with Alzheimer's disease. The code for this is DAEFRHDSGY EVHHQKLVFF AEDVGSNKGA IIGLMVGGVV IA, and the repeated letters near the end are quite audible.

The "Green Fluorescent Protein" is something from jellyfish that has been used in a lot of experiments because it's easy to detect when it is being expressed (the cells light up), so experimenters can link it to another gene and find out when that other gene is being expressed. It makes a somewhat longer song.

Although I started out aiming at the SARS-CoV-2 virus, I didn't end up generating any tracks I really liked from its genome. Here is probably my best related one: the famous "spike" protein from the SARS "classic" virus (not the current pandemic virus, but closely related). This piece is almost 25 minutes long and I'm not sure listening to it will really give you a better understanding of how the virus infects cells, but at least it's a new way of presenting the biological data.

For more samples of music created by sonifying protein sequences, and download links for these ones, see Genome Jazz on the North Coast Synthesis audio server.
